PyTorch-Direct: Introducing Deep Learning Framework with GPU-Centric Data Access for Faster Large GNN Training | NVIDIA On-Demand

Accessible Multi-Billion Parameter Model Training with PyTorch Lightning + DeepSpeed | by PyTorch Lightning team | PyTorch Lightning Developer Blog

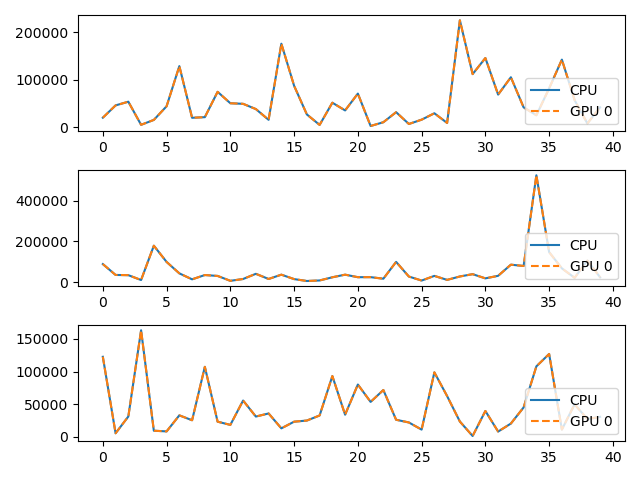

PyTorch MNIST example spawns multiple processes in the same GPU · Issue #287 · horovod/horovod · GitHub